Research Projects

Neurocomputational basis of compositionality

Neural, behavioral and computational basis of invariant object recognition

Neurocomputational basis of the acquisition of “abstract task knowledge”

My research goal is to understand the neurocomputational basis of acquiring abstract task knowledge. At a mechanistic level, how is the brain able to grasp overarching principles, patterns, and relationships that generalize across diverse behavioral contexts? For example, upon visiting a new country, one can readily generalize the check-out rules of a single restaurant to the other restaurants of that same country. Although this capacity for generalization is considered a key ingredient of intelligent behavior, and one that artificial neural networks still lack on the way to human-like performance, its neural underpinnings remain unknown. To address this gap, my future research program lies at the intersection of neuroscience and artificial intelligence (AI). By discovering the brain’s mechanisms for acquiring abstract knowledge, we will inspire the development of new algorithms for general-purpose, flexible AI systems, which, in turn, will provide deeper insights into brain function.

I have taken a multidisciplinary approach that involves complex behavioral tasks, simultaneous cortex-wide imaging, electrophysiology, causal manipulations, and computational modeling. I have also incorporated methods from geometry and topology to discover a meta-level algorithmic language that can integrate computations across multiple scales of brain operation: neural activity, circuits, and behavior. This pursuit will contribute to a comprehensive and mechanistic understanding of the neural substrates underpinning abstract knowledge. Ultimately, I intend to use this foundational understanding as a basis for devising cutting-edge brain stimulation techniques that help alleviate symptoms of brain disorders.

Created by DALL.E

I then simultaneously recorded from prefrontal cortex (LPFC, FEF; Fig. 1a), parietal cortex (LIP), basal ganglia (striatum), and inferior temporal cortex (IT) and found that tasks that use shared sub-tasks (e.g., a color categorization sub-task) also engage shared neural representation subspaces (Fig. 1b). Moreover, as monkeys flexibly transitioned between tasks, the degree of shared subspace engagement was linked to the monkeys’ internal belief about the current task state. Importantly, changes in the geometry of the representation allowed this flexibility: compression of the representation selectively enhanced task-relevant feature representations and suppressed task-irrelevant ones (Fig. 1c,d).

In sum, these findings elucidate the brain's ability to seamlessly compose tasks by combining sub-task-specific elements and offer insights into flexible cognitive processes that could inform both neural and artificial network models (Tafazoli et al., Nature, 2025).

Relevant publication(s):

1- Tafazoli, S., Bouchacourt, F., Ardalan, A., Markov, N.T., Uchimura, M., Mattar, M.G., Daw, N.D., and Buschman, T.J. (2025). “Building Compositional Tasks with Shared Neural Subspaces”. Nature, Nov 26. https://doi.org/10.1038/s41586-025-09805-2

2- Bouchacourt, F.*, Tafazoli, S.*, Mattar, M.G., Buschman, T.J., and Daw, N.D. (2022). “Fast rule switching and slow rule updating in a perceptual categorization task”. eLife 11, e82531. https://elifesciences.org/articles/82531

3- MacDowell, C.J., Tafazoli, S., and Buschman, T.J. (2022). “A Goldilocks theory of cognitive control: Balancing precision and efficiency with low-dimensional control states”. Current Opinion in Neurobiology 76, 102606. https://www.sciencedirect.com/science/article/pii/S0959438822001003

Created by DALL.E 3

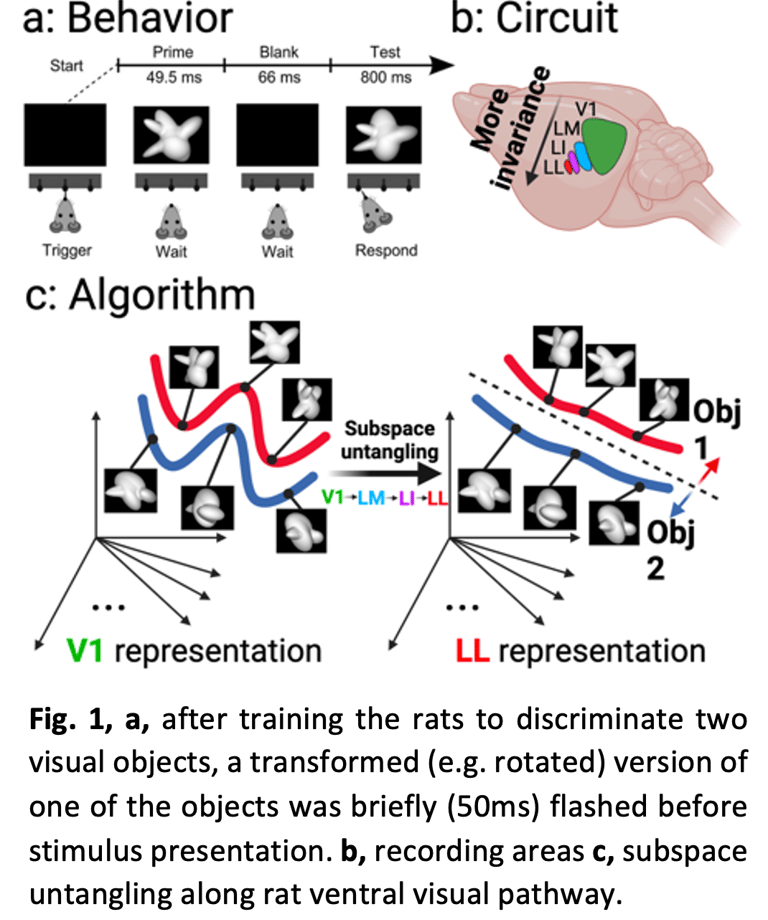

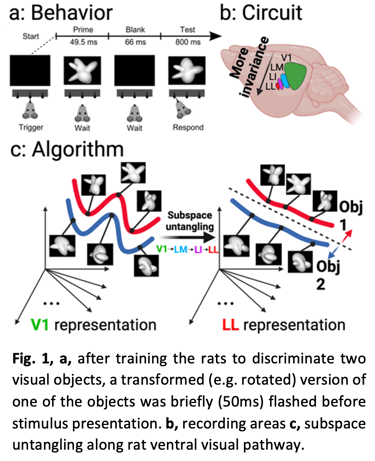

To understand how the brain represents abstract knowledge at the perceptual level, during my Ph.D. I performed a series of experiments to study the behavioral and neurocomputational basis of Invariant Object Recognition (IOR) in rats. IOR is the ability to distill diverse object representations into an abstract representation despite variation in object appearance (e.g., size or viewpoint). First, using a novel behavioral task based on visual priming, I demonstrated that rats can perform invariant object recognition (Fig. 1a, Tafazoli et al., J. Neuroscience, 2012).

I then recorded from four visual areas in rats (Fig. 1b, V1, LM, LI, and LL) and found that neurons along these hierarchically organized regions become progressively tuned to more complex visual object attributes while becoming more view-invariant. At the population level, these representations become more linearly separable, suggesting a reformatting of representations from tangled in low-level areas such as V1 to untangled in higher-level areas such as LL (Fig. 1c, Tafazoli et al., eLife, 2017). Later, we found a similar reformatting of representations in deep neural networks (Muratore, Tafazoli, et al., NeurIPS, 2022).
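The notion of a representation going from tangled to untangled can be illustrated with a toy example. The sketch below is not the analysis used in these studies; it is a minimal, hypothetical illustration in which a simple perceptron serves as the linear readout, an XOR-like layout stands in for a tangled representation, and an added product feature (an assumption chosen purely for illustration) stands in for a reformatting that makes the same categories linearly separable.

```python
def perceptron_accuracy(points, labels, epochs=1000):
    """Train a perceptron readout and return its accuracy on the training points.

    An accuracy of 1.0 means a linear boundary separating the two classes was found.
    """
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(points, labels):  # labels are -1 or +1
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * activation <= 0:  # misclassified (or on the boundary): update
                weights = [w + y * xi for w, xi in zip(weights, x)]
                bias += y
                mistakes += 1
        if mistakes == 0:  # a full clean pass: the classes are separated
            break
    correct = sum(
        1
        for x, y in zip(points, labels)
        if y * (sum(w * xi for w, xi in zip(weights, x)) + bias) > 0
    )
    return correct / len(points)


# Hypothetical two-unit "population responses" to four stimuli from two categories.
labels = [1, 1, -1, -1]
tangled = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0), (1.0, 0.0)]  # XOR-like layout

# Hypothetical reformatting: add one nonlinear feature (the product of the two units).
untangled = [(a, b, a * b) for a, b in tangled]

tangled_accuracy = perceptron_accuracy(tangled, labels)
untangled_accuracy = perceptron_accuracy(untangled, labels)
print(tangled_accuracy, untangled_accuracy)
```

In this toy case no linear readout can classify all four tangled points, whereas the reformatted points are perfectly separable, which is the sense in which a representation is said to be untangled.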

Overall, this series of works suggests that successive transformations of the geometry of neural representations enable the emergence of abstract object representations in both biological and artificial neural networks.

Relevant publication(s):

1- Tafazoli, S.*, Di Filippo, A.*, and Zoccolan, D. (2012). “Transformation-Tolerant Object Recognition in Rats Revealed by Visual Priming”. Journal of Neuroscience 32, 21–34. (Highlighted as featured article of Journal of Neuroscience) https://www.jneurosci.org/content/32/1/21

2- Tafazoli, S.*, Safaai, H.*, De Franceschi, G., Rosselli, F.B., Vanzella, W., Riggi, M., Buffolo, F., Panzeri, S., and Zoccolan, D. (2017). “Emergence of transformation-tolerant representations of visual objects in rat lateral extrastriate cortex”. eLife 6, e22794. (Highlighted as featured article of eLife) https://doi.org/10.7554/eLife.22794

3- Muratore, P., Tafazoli, S., Piasini, E., Laio, A., and Zoccolan, D. (2022). “Prune and distill: similar reformatting of image information along rat visual cortex and deep neural networks”. NeurIPS 2022. http://arxiv.org/abs/2205.13816

Cognition is remarkably flexible: we can rapidly learn new tasks and switch between them. Theoretical modeling suggests this flexibility may be due, in part, to the brain’s ability to compositionally re-use existing task representations to build novel task representations.

To study the neural mechanisms supporting compositionality, I trained monkeys to perform, and switch between, three compositionally related tasks. Each task required the animal to discriminate one of two features of a stimulus (shape or color) and then respond with an associated eye movement along one of two response axes (Axis 1 or Axis 2). In collaboration with Nathaniel Daw’s group, we found that animals perform this task by simultaneously using prior knowledge of the task structure (modeled with Bayesian inference) and trial-and-error learning (modeled with Q-learning; Bouchacourt*, Tafazoli* et al., eLife, 2022).
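The two ingredients named above can be sketched generically. The code below is a minimal illustration under stated assumptions, not the model fitted in the eLife paper: the three candidate rules, the reward likelihoods, the state and action names, and the learning rate are all hypothetical. It shows a Bayesian belief over candidate rules updated from a single outcome, alongside an incremental Q-learning update of one stimulus-response value.

```python
def bayes_update(belief, likelihoods):
    """Posterior over candidate rules given the likelihood of the observed outcome under each rule."""
    unnormalized = [b * l for b, l in zip(belief, likelihoods)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def q_update(q_values, state, action, reward, learning_rate=0.1):
    """Q-learning update for a trial that ends after one action (so there is no bootstrapped next-state term)."""
    old = q_values.get((state, action), 0.0)
    q_values[(state, action)] = old + learning_rate * (reward - old)
    return q_values


# Hypothetical setup: three candidate rules with a uniform prior.
belief = [1 / 3, 1 / 3, 1 / 3]

# Hypothetical likelihoods of observing a reward on this trial under each rule.
reward_likelihoods = [0.9, 0.1, 0.1]
belief = bayes_update(belief, reward_likelihoods)

# Hypothetical state and action labels; the value moves only a small step per trial.
q_values = q_update({}, state="stimulus_A", action="saccade_left", reward=1.0)
print(belief, q_values)
```

With these made-up numbers, one rewarded trial shifts most of the belief onto the first rule, while the Q-value moves only a tenth of the way toward the reward. This contrast between fast belief-based inference and slow incremental value learning is what the sketch is meant to convey.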

Contact

tafazoli@princeton.edu
